AI technology is trained to amass more knowledge and sound more human. This often involves analyzing large sets of data, whether drawn from millions of books or countless social media posts. A few issues have come up as a result. One is that AI-generated text can exhibit telltale characteristics, which can, in turn, make it easier to spot. Another, unfortunately, is that some AIs are starting to sound (how best to phrase this?) deeply racist.

In an article for Business Insider, Monica Melton chronicled several disquieting ways in which AI systems have exhibited racial bias. Some of this, Melton observes, comes from the fact that the tech world is still largely white and male, which can mean that the ways in which machine learning systems are trained do not account for large portions of the global population.

Unfortunately, this is also far from the first time that these issues have come up. You might recall the time in 2016 when Microsoft debuted a chatbot, Tay, that was designed to learn from users on Twitter. What happened next is best described by The Verge’s headline: “Twitter taught Microsoft’s AI chatbot to be a racist asshole in less than a day.” Tay went offline less than a day after being turned on.

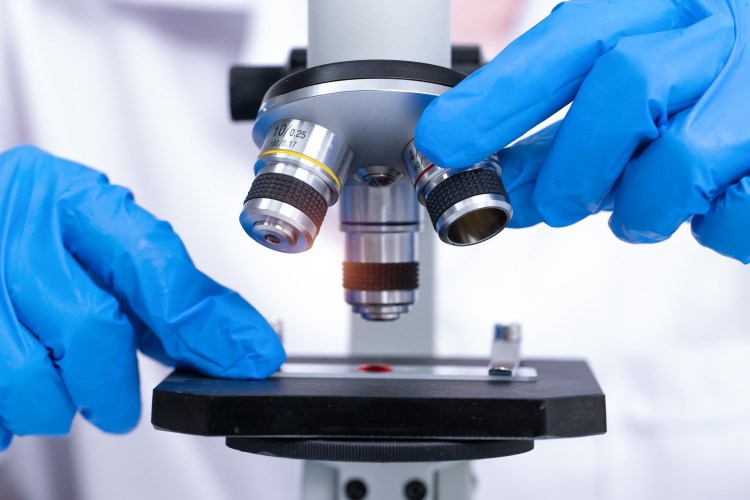

Machine Learning Could Provide a Diagnosis After a Single Cough

While the technology behind circa-2024 AI is more powerful than what enabled Tay to work, some of the same concerns have endured. A recent update to a 2019 article at IEEE Spectrum cited comments made by Jay Wolcott of the generative AI company Knowbl. “How do you control the content pieces that the [large language model] will and won’t respond to?” Wolcott told IEEE Spectrum. That question creates serious tension between the more utopian vision that AI’s boosters advocate and the more disquieting elements of the most hostile parts of the internet.

This article was featured in the InsideHook newsletter.