There’s been a lot of conversation lately about the current state of search engines, and more specifically about the frustrations many users have had when trying to find basic information. It’s prompted some people to seek out alternatives to Google, or to work on building their own. The addition of AI into the mix has led to further dilemmas, including AI treating stories from The Onion as factual rather than satirical.

But when it comes to the effects of AI-generated images on search engine results, we seem to be on the verge of something utterly terrifying. That’s not hyperbole; the headline for a recent story at 404 Media by Emanuel Maiberg declared that AI images “Have Opened a Portal to Hell” in search engines. That is, unfortunately, an eminently accurate description of the situation at hand.

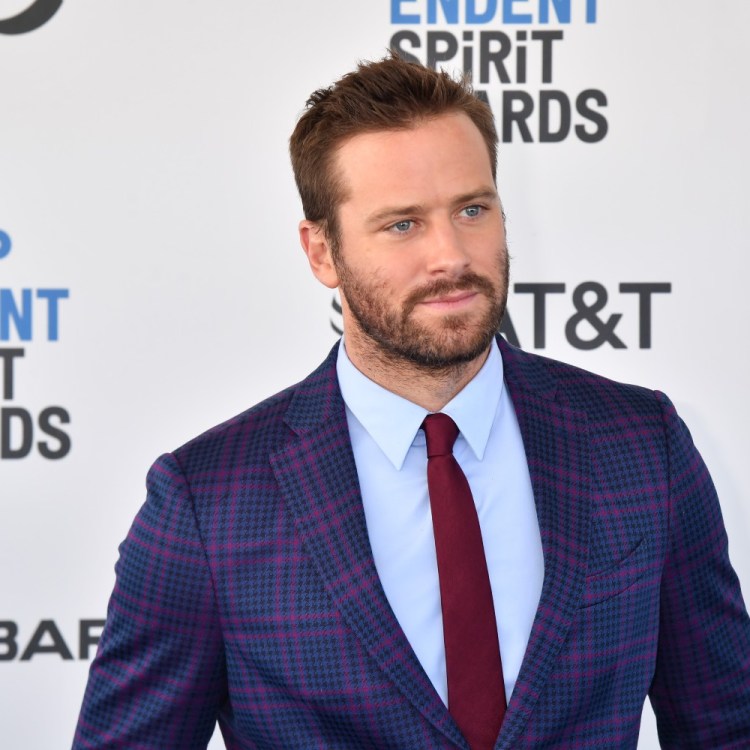

As Maiberg explained, his article grew out of another investigation, this one into scams on Taylor Swift fan pages. While researching AI-generated images for that article, he discovered a number of what appeared to be AI-generated images of Swift. Maiberg then began researching the presence of AI-generated images in search results for different female celebrities wearing swimsuits. That’s when the investigation took an even bleaker turn.

Maiberg wrote that, for two of the celebrities in question, Google Image Search returned “AI-generated images of them in swimsuits, but as children, even though the search didn’t include terms related to age.” Following those links, Maiberg noted, can take users to “AI-generated nonconsensual nude images and AI-generated nude images of celebrities made to look like children.”

Deepfake images have already popped up in ways that increase disinformation about ongoing conflicts around the globe. This latest permutation is unsettling for a very different reason: it demonstrates how AI-generated images can make it harder to find actual photographs and can take users to thoroughly disturbing places, both literally and metaphorically.

There is, unfortunately, a reason why Cory Doctorow’s concept of enshittification has become ubiquitous in the last year or so. In a recent lecture, Doctorow described a process by which “the services that matter to us, that we rely on, are turning into giant piles of shit.” And getting a bunch of dodgy AI images, and worse, when carrying out a fairly basic image search certainly sounds like it qualifies, with a side order of moral rot along the way.

This article was featured in the InsideHook newsletter.