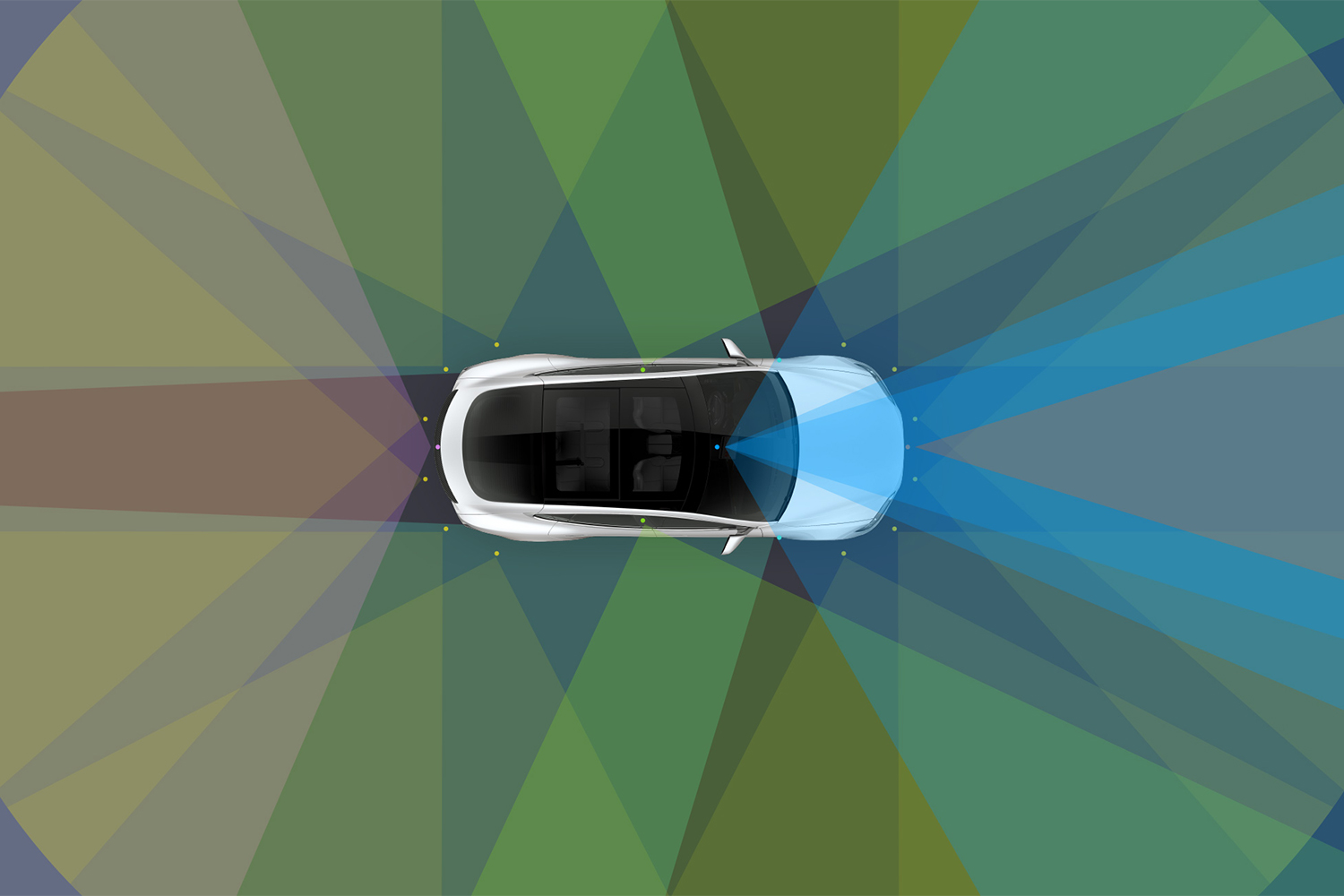

In theory, driver-assistance systems make the roads safer and reduce the potential for driver error to cause harm. In practice, it isn’t that simple. A recent Car and Driver study made it alarmingly clear that driver-assist systems can be easily fooled and have a number of limitations. And now, one of the highest-profile driver-assist systems is the subject of a federal investigation.

That system is Tesla’s Autopilot. At Engadget, K. Holt has more details on the announcement of the investigation, which is being conducted by the U.S. National Highway Traffic Safety Administration. The NHTSA cited 11 instances since 2018 in which a vehicle running Autopilot or Traffic-Aware Cruise Control crashed into a first responder vehicle. These crashes resulted in one death and 17 injuries.

According to a document released by the NHTSA providing more details about the investigation, “the crash scenes encountered included scene control measures such as first responder vehicle lights, flares, an illuminated arrow board, and road cones.”

The NHTSA’s Office of Defects Investigation is currently looking into “Model Year 2014-2021 Models Y, X, S, and 3.” Engadget’s report notes, per Bloomberg, that this group numbers around 765,000 vehicles. The potential implications of the investigation are significant, though it’s also worth mentioning that the NHTSA cleared Tesla in an earlier investigation involving Autopilot.
