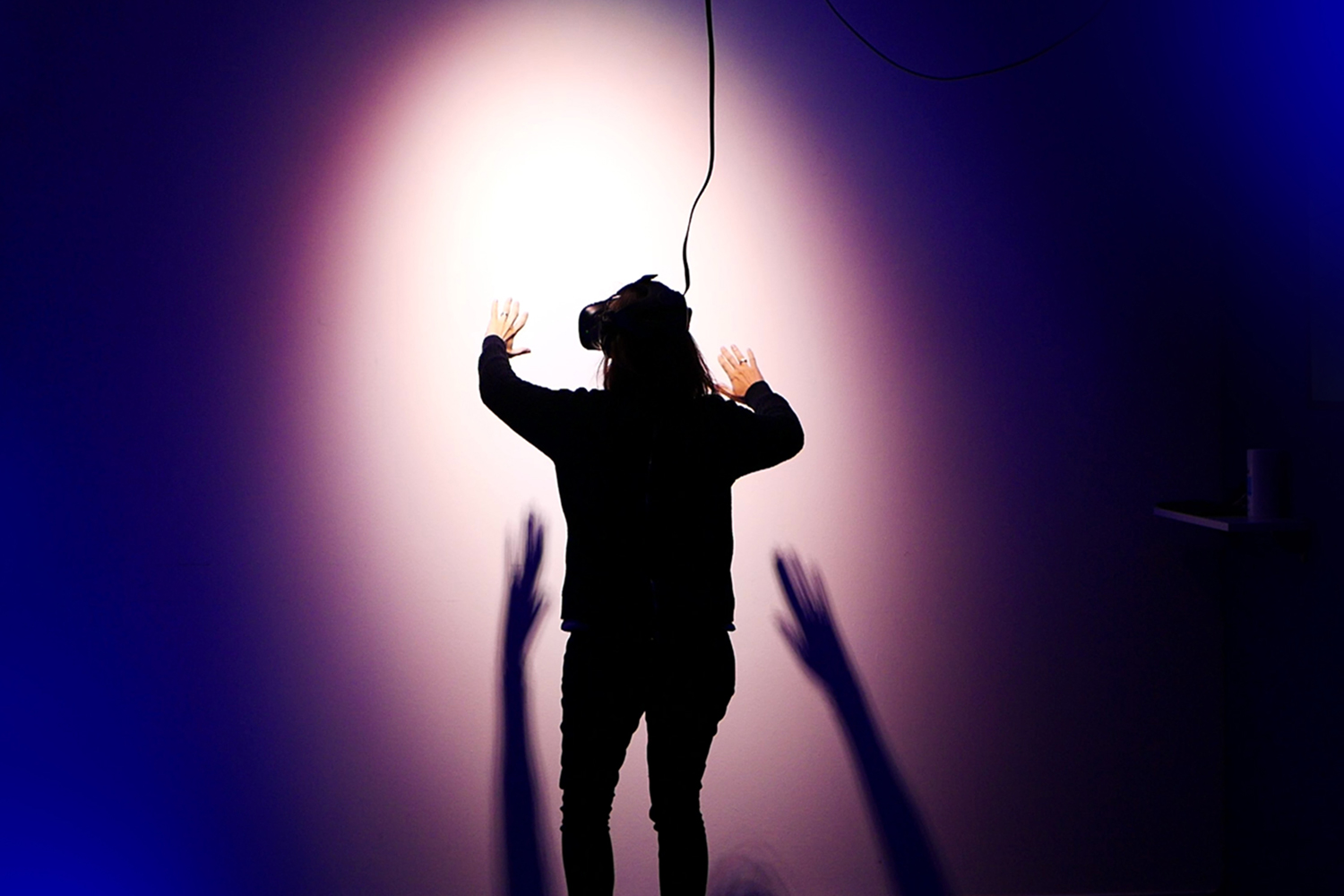

The virtual metaverse is rife with bigotry, harmful content and even reports of virtual groping and rape, according to a new study by the corporate accountability group SumOfUs.

Per Fast Company, the report suggests Meta’s VR platforms (Horizon Worlds and Horizon Venues) have the same issues as other social media sites, but now with the addition of virtual stalking and even assault. “Given the failure of Meta to moderate content on its other platforms, it is unsurprising that it is already seriously lagging behind with content moderation on its metaverse platforms,” notes the report. “With just 300,000 users, it is remarkable how quickly Horizon Worlds has become a breeding ground for harmful content.”

Among the issues in the metaverse: little oversight or moderation, inadequate processes for reporting problems, a failure by Meta to take action against offenders, and the ease with which children can access the platform and encounter harmful behavior. Some Horizon Worlds and Venues users have also reported incidents ranging from virtual groping to, in one case, a user who said she was “virtually gang raped” within a minute of joining the platform. Although these attacks are virtual, users can still feel vibrations in their VR controllers that correspond to what’s happening in the metaverse, which creates “a very disorienting and even disturbing physical experience during a virtual assault,” as the report suggests.

As the study suggests, this behavior isn’t limited to Meta’s platforms; it is also prevalent on other apps that can likely be accessed through Meta’s Oculus Quest VR headset. While community standards exist, there are too few content moderators. And Meta seems fine with this: the company is even taking a somewhat hands-off approach.

As noted by the researchers: “In a recent blog post, Meta’s policy chief Nick Clegg said Meta is viewing interactions in the Metaverse to be more ‘ephemeral’, and that decisions around moderating content will be more akin to whether or not to intervene in a heated back and forth in a bar, rather than like the active policing of harmful content on Facebook.” While Meta did introduce a four-foot Personal Boundary barrier for its avatars earlier this year (which users can turn off), that doesn’t protect users, including children, from the barrage of hate speech and conspiracy theories reported on the platform.

The solution? The study’s researchers suggest preventing Meta from engaging in anticompetitive practices, strengthening data protection laws, and reining in Big Tech with legislation that forces changes in how companies target ads and demands more transparency from metaverse companies about how their algorithms are designed and operated.

Thanks for reading InsideHook.