Human intelligence currently has some big advantages over the artificial version. For one: as we experience our lives, we naturally learn things. Figuring out how to make computers gain knowledge remains a central challenge for AI. For another: we can recognize when we still have more to learn. This is where the tech world runs into a major obstacle: making robots understand uncertainty and acknowledge that there are things still to be discovered.

Cade Metz explores this topic for Wired. In particular, Metz delves into the statistical model called a Gaussian process (GP). GPs may be key to creating robots that are truly autonomous. Metz writes:

“Fundamentally, Gaussian processes are a good way of identifying uncertainty. ‘Knowing that you don’t know is a very good thing,’ says Chris Williams, a University of Edinburgh AI researcher who co-wrote the definitive book on Gaussian processes and machine learning. ‘Making a confident error is the worst thing you can do.’”
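For readers curious what "knowing that you don't know" can look like in practice, here is a minimal sketch of a Gaussian process in Python. It is not taken from Metz's article or from Williams's book. The kernel choice, the function names `rbf_kernel` and `gp_predict`, and the toy data are all assumptions made for illustration. The model is trained on a handful of points and then asked for a prediction both near its data and far from it.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential similarity between two 1-D arrays of inputs."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_predict(x_train, y_train, x_test, noise=1e-6):
    """Return the GP posterior mean and standard deviation at x_test.

    Hypothetical helper for illustration: zero prior mean, RBF kernel,
    and a small noise term added for numerical stability.
    """
    k_train = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_cross = rbf_kernel(x_train, x_test)
    k_test = rbf_kernel(x_test, x_test)
    mean = k_cross.T @ np.linalg.solve(k_train, y_train)
    cov = k_test - k_cross.T @ np.linalg.solve(k_train, k_cross)
    std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
    return mean, std

# Toy data (an assumption): five observations of a sine curve.
x_train = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y_train = np.sin(x_train)

# One query close to the observations, one far away from them.
x_test = np.array([0.5, 6.0])
mean, std = gp_predict(x_train, y_train, x_test)

for x, m, s in zip(x_test, mean, std):
    print(f"x={x:4.1f}  prediction={m:+.3f}  uncertainty={s:.3f}")
```

The number to watch is the uncertainty. It should be small for the query that sits among the observations and much larger for the one far outside them, which is the model's way of signaling that it is guessing rather than making a confident error.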

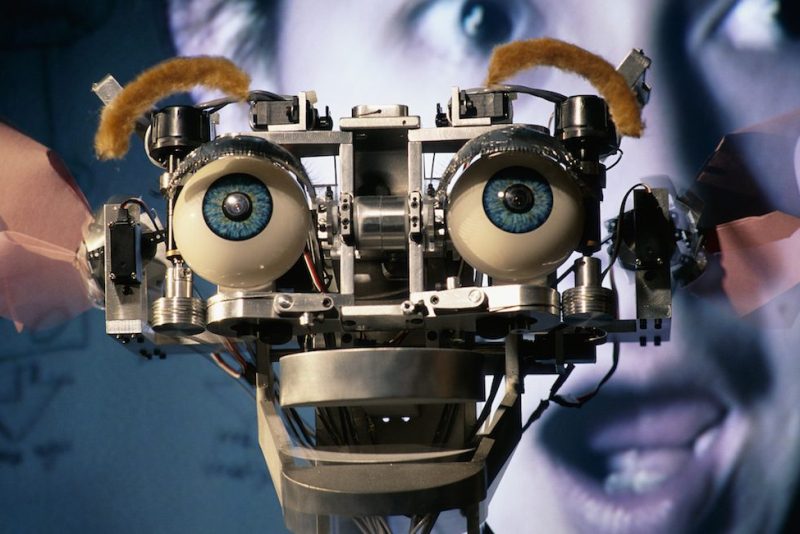

Understand: robots or other computers making confident errors can have horrific implications in the real world. More and more self-driving cars are on the roads. We’ve all had moments when we’re ready to switch lanes, only to take another glance and realize another motorist had been in our blind spot or had come out of nowhere behind us. That’s the challenge now: to make computers recognize that things may not be what they seem. Below, see more of Kismet, the MIT robot that simulates social intelligence.

—RealClearLife Staff

This article appeared in an InsideHook newsletter.